Using LocalStack to test AWS services locally

LocalStack is a cloud service emulator that runs in a single container on your machine or within your CI environment.

With LocalStack, you can run your AWS applications or Lambdas entirely on your local machine without connecting to a remote cloud provider!

Whether you are testing complex CDK applications or Terraform configurations, or just beginning to learn about AWS services, LocalStack helps speed up and simplify your testing and development workflow.

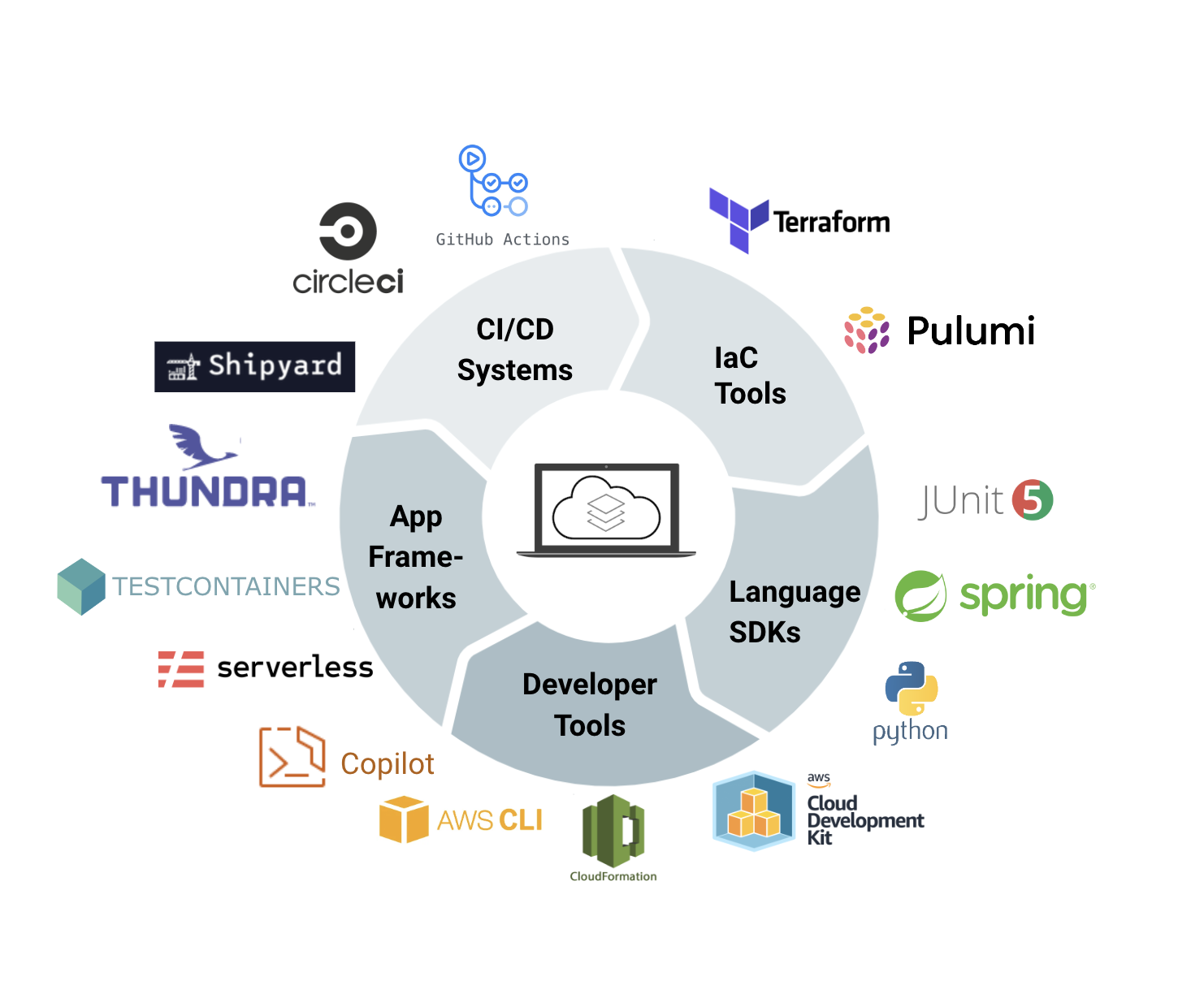

Integrations

LocalStack can be integrated with a wide range of tools and services. One of its key uses is running integration tests in CI/CD pipelines without affecting, or being throttled by, any cloud deployments you may have.

Setting up LocalStack

To configure LocalStack, create a project folder containing a docker-compose.yml file to hold the configuration.

Below is a docker-compose snippet that configures LocalStack together with Elasticsearch:

```yaml
version: "3.9"

services:
  elasticsearch:
    container_name: elasticsearch
    image: docker.elastic.co/elasticsearch/elasticsearch:7.10.2 # Max version supported by LocalStack
    environment:
      - node.name=elasticsearch
      - cluster.name=es-docker-cluster
      - discovery.type=single-node
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ports:
      - "9200:9200"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - data01:/usr/share/elasticsearch/data

  localstack:
    container_name: "${LOCALSTACK_DOCKER_NAME-localstack_main}"
    image: localstack/localstack
    ports:
      - "4566:4566" # Edge port
    depends_on:
      - elasticsearch
    environment:
      - DEBUG=1
      - OPENSEARCH_ENDPOINT_STRATEGY=port # port, path, domain
      - DOCKER_HOST=unix:///var/run/docker.sock
    volumes:
      - "${LOCALSTACK_VOLUME_DIR:-./volume}:/var/lib/localstack"
      - "/var/run/docker.sock:/var/run/docker.sock"

volumes:
  data01:
    driver: local
```

For this demo I will be using S3, Lambda and Elasticsearch. As you can see above, I am not specifying an image for S3 or Lambda: these services are provided by the LocalStack image itself and are all exposed through a single edge port (4566), which LocalStack uses to route each request to the right service. Elasticsearch runs as a separate image because it is a separate service that piggybacks onto LocalStack.
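To make the single-port idea a little more concrete, here is a simplified sketch of one signal a shared edge port can use to tell services apart: every SigV4-signed AWS request names its target service in the credential scope of its Authorization header. This is an illustration only, not LocalStack's actual routing code (which is more involved); the function name `serviceFromAuthHeader` and the sample headers are made up for the example.

```javascript
// Simplified illustration only: this is NOT LocalStack's real router.
// It shows one signal a single edge port can use to tell services apart:
// the credential scope carried by every SigV4-signed AWS request.
function serviceFromAuthHeader(authorization) {
  // Example header:
  // AWS4-HMAC-SHA256 Credential=test/20240101/eu-west-1/s3/aws4_request, SignedHeaders=host, Signature=...
  const match = /Credential=([^,\s]+)/.exec(authorization || '');
  if (!match) return null;

  // Scope layout: <accessKeyId>/<date>/<region>/<service>/aws4_request
  const parts = match[1].split('/');
  if (parts.length !== 5 || parts[4] !== 'aws4_request') return null;
  return { region: parts[2], service: parts[3] };
}

// Two requests sent to the same port (4566) resolve to different services.
const s3Request =
  'AWS4-HMAC-SHA256 Credential=test/20240101/eu-west-1/s3/aws4_request, SignedHeaders=host, Signature=abc';
const lambdaRequest =
  'AWS4-HMAC-SHA256 Credential=test/20240101/eu-west-1/lambda/aws4_request, SignedHeaders=host, Signature=def';

console.log(serviceFromAuthHeader(s3Request));     // { region: 'eu-west-1', service: 's3' }
console.log(serviceFromAuthHeader(lambdaRequest)); // { region: 'eu-west-1', service: 'lambda' }
```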

To start the stack in Docker, run the following command in the folder containing the docker-compose file:

```bash
docker-compose up
```

That is all that is required to configure LocalStack. If you need to tailor it further, head over to https://docs.localstack.cloud/user-guide/ for more guidance.
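The containers can take a few seconds to become ready after `docker-compose up`, so if you script the rest of the demo, a small readiness check helps. The sketch below assumes LocalStack serves a health endpoint at `/_localstack/health` on the edge port (older releases used `/health`) that returns JSON with a `services` map, and it needs Node.js 18+ for the built-in `fetch`. `isReady` and `waitForLocalStack` are hypothetical helper names.

```javascript
// Sketch: wait until LocalStack reports the services this demo needs.
// Assumption: GET http://localhost:4566/_localstack/health returns JSON like
// { "services": { "s3": "running", "lambda": "available", "logs": "available", ... } }
// Requires Node.js 18+ for the global fetch().

const READY_STATES = new Set(['available', 'running']);

// Pure check, easy to unit test: are all required services ready?
function isReady(health, required) {
  const services = (health && health.services) || {};
  return required.every((name) => READY_STATES.has(services[name]));
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Poll the health endpoint until every required service is ready, or give up.
async function waitForLocalStack(
  required = ['s3', 'lambda', 'logs'],
  { url = 'http://localhost:4566/_localstack/health', retries = 30, delayMs = 2000 } = {},
) {
  for (let attempt = 1; attempt <= retries; attempt++) {
    try {
      const res = await fetch(url);
      if (res.ok && isReady(await res.json(), required)) return true;
    } catch {
      // The container may still be starting; ignore the error and retry.
    }
    await sleep(delayMs);
  }
  throw new Error(`LocalStack not ready after ${retries} attempts: ${required.join(', ')}`);
}

module.exports = { isReady, waitForLocalStack };
```

For example, `waitForLocalStack().then(() => console.log('LocalStack is ready')).catch(console.error);` could run before the awslocal commands. Keeping the check itself in a pure `isReady` function means the polling loop is the only part that needs a running container.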

Demo

Now that the services are up and running in your local Docker instance, we can start using them exactly as you would if they were hosted in the cloud.

For this demo you will need the tools below installed. The commands are tailored to macOS users with Homebrew installed, but Windows and Linux users can still follow along using the equivalent installers for their platform.

Key tools required:

- Node.js (`brew install node`)
- LocalStack (`brew install localstack`)
- Python (`brew install python`), required to install awscli-local
- awscli-local (`pip install awscli-local`), which saves you from defining `--endpoint-url` on each command
- The AWS CLI (`brew install awscli`), which awscli-local wraps
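The point about `--endpoint-url` can be illustrated with a rough approximation of what awslocal does: it forwards your arguments to the regular AWS CLI with `--endpoint-url` pointing at LocalStack's edge port. The real wrapper does more than this; `toAwsArgs` and `awsLocal` are hypothetical names, and the sketch assumes the `aws` binary is on your PATH and LocalStack is listening on http://localhost:4566.

```javascript
// Rough approximation of awscli-local (NOT its actual implementation):
// prepend --endpoint-url so every AWS CLI call goes to LocalStack.
const { spawnSync } = require('child_process');

function toAwsArgs(args, endpoint = 'http://localhost:4566') {
  // Global options such as --endpoint-url may precede the service command.
  return ['--endpoint-url', endpoint, ...args];
}

// Run the AWS CLI against LocalStack; returns the CLI's exit code.
function awsLocal(args) {
  const result = spawnSync('aws', toAwsArgs(args), { stdio: 'inherit' });
  if (result.error) throw result.error; // e.g. `aws` not installed
  return result.status;
}

module.exports = { toAwsArgs, awsLocal };
```

So `awsLocal(['s3', 'mb', 's3://my-bucket'])` would run `aws --endpoint-url http://localhost:4566 s3 mb s3://my-bucket`, which is what `awslocal s3 mb s3://my-bucket` saves you from typing.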

Creating a Lambda function

Within the same project folder as your docker-compose.yml, create a new directory to hold the files for your Lambda function. Open a terminal, cd into that directory and run:

```bash
npm init
```

This launches a wizard that creates the package.json for your Lambda. You can accept all the defaults (or skip the wizard entirely with `npm init -y`); the file is only used to pull in the packages.

Once it has been created, run:

```bash
npm install aws-sdk
```

This installs the aws-sdk package, which the Lambda uses to talk to the services hosted in LocalStack.

Create an index.js file containing the below code:

```javascript
const aws = require('aws-sdk');

// LOCALSTACK_HOSTNAME is set by LocalStack inside the function's environment.
const s3 = new aws.S3({
  apiVersion: '2006-03-01',
  endpoint: `http://${process.env.LOCALSTACK_HOSTNAME}:4566`, // These two lines are
  s3ForcePathStyle: true,                                      // only needed for LocalStack.
});

module.exports.handler = async event => {
  // Get the object from the event and show its content type
  const bucket = event.Records[0].s3.bucket.name;
  const key = decodeURIComponent(event.Records[0].s3.object.key.replace(/\+/g, ' '));
  const params = {
    Bucket: bucket,
    Key: key,
  };

  try {
    const { ContentType } = await s3.getObject(params).promise();
    console.log('Lambda executed outputting content-type');
    console.log('CONTENT TYPE:', ContentType);
    return ContentType;
  } catch (err) {
    console.log(err);
    const message = `Error getting object ${key} from bucket ${bucket}. Make sure they exist and your bucket is in the same region as this function.`;
    console.log(message);
    throw new Error(message);
  }
};
```

The handler reads the bucket name and object key from the incoming S3 event, fetches that object and logs its content type. We will trigger it by uploading a dummy file to the bucket.
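S3 URL-encodes object keys in event notifications and sends spaces as `+`, which is why the handler replaces `+` with a space before calling `decodeURIComponent` (a literal plus arrives as `%2B`, so the order matters). The sketch below pulls that parsing step out into a hypothetical `objectFromS3Event` function and runs it against a hand-written event that contains only the fields the handler reads; real S3 events carry many more.

```javascript
// Sketch: the event-parsing step of the handler, isolated so it can be
// checked without LocalStack. objectFromS3Event is a hypothetical name.
function objectFromS3Event(event) {
  const record = event.Records[0];
  return {
    bucket: record.s3.bucket.name,
    // Turn '+' back into spaces first, then decode %XX sequences.
    key: decodeURIComponent(record.s3.object.key.replace(/\+/g, ' ')),
  };
}

// Hand-written sample event with only the fields the handler uses.
const sampleEvent = {
  Records: [
    { s3: { bucket: { name: 'my-bucket' }, object: { key: 'reports/my+report%281%29.txt' } } },
  ],
};

console.log(objectFromS3Event(sampleEvent));
// { bucket: 'my-bucket', key: 'reports/my report(1).txt' }
```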

Now that we have a Lambda function, we need to package it into a zip file so it can be uploaded to the Lambda service. Zip the contents of the directory (index.js, package.json and node_modules) rather than the directory itself, so that index.js sits at the root of the archive; for example, from inside the Lambda directory, `zip -r ../lambda.zip .` creates lambda.zip in the project root.

Two more files are needed to run this demo: a dummy text file to upload, and a JSON file that sets up the event trigger.

Create a file called dummyfile.txt in the root of your project.

Create a JSON file in the root of your project called ‘s3-notif-config.json’ and paste the following into it:

```json
{
  "LambdaFunctionConfigurations": [
    {
      "Id": "s3eventtriggerslambda",
      "LambdaFunctionArn": "arn:aws:lambda:eu-west-1:000000000000:function:my-lambda",
      "Events": ["s3:ObjectCreated:*"]
    }
  ]
}
```
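The trigger only works if `LambdaFunctionArn` matches the function created in the next step (name my-lambda, region eu-west-1, and LocalStack's default account ID 000000000000), so you may prefer to generate the file from those values instead of typing the ARN by hand. A minimal sketch; `lambdaArn`, `notificationConfig` and the script name gen-notif-config.js are hypothetical.

```javascript
// Sketch: build s3-notif-config.json from the same values passed to
// `lambda create-function`, so the ARN cannot drift from them.
function lambdaArn({ region, accountId, functionName }) {
  return `arn:aws:lambda:${region}:${accountId}:function:${functionName}`;
}

function notificationConfig(functionArn) {
  return {
    LambdaFunctionConfigurations: [
      {
        Id: 's3eventtriggerslambda',
        LambdaFunctionArn: functionArn,
        Events: ['s3:ObjectCreated:*'],
      },
    ],
  };
}

// 000000000000 is LocalStack's default account ID.
const arn = lambdaArn({ region: 'eu-west-1', accountId: '000000000000', functionName: 'my-lambda' });

// Print the JSON; redirect it into the file, e.g.
// node gen-notif-config.js > s3-notif-config.json
console.log(JSON.stringify(notificationConfig(arn), null, 2));
```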

Running the demo

First, create the Lambda function:

```bash
awslocal \
  lambda create-function --function-name my-lambda \
  --region eu-west-1 \
  --zip-file fileb://lambda.zip \
  --handler index.handler --runtime nodejs12.x \
  --role arn:aws:iam::000000000000:role/lambda-role
```

Make sure your terminal is in the directory that holds lambda.zip, because the fileb:// path is resolved relative to it.
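The `--handler index.handler` value ties the function to the zip's layout: for the Node.js runtime, the part before the last dot is the module path inside the archive and the part after it is the exported function. The hypothetical `parseHandler` below mirrors that convention (assuming a .js file) purely to illustrate it; it is not the runtime's own loader.

```javascript
// Sketch of the handler-string convention (NOT the Lambda runtime's loader):
// "<module path>.<export name>", split at the last dot; assumes a .js file.
function parseHandler(handler) {
  const dot = handler.lastIndexOf('.');
  if (dot <= 0 || dot === handler.length - 1) {
    throw new Error(`Invalid handler string: ${handler}`);
  }
  return {
    modulePath: handler.slice(0, dot) + '.js', // relative to the zip's root
    exportName: handler.slice(dot + 1),        // e.g. module.exports.handler
  };
}

console.log(parseHandler('index.handler'));
// { modulePath: 'index.js', exportName: 'handler' }
```

This is why index.js has to sit at the root of lambda.zip: with `index.handler`, the runtime looks for index.js there and calls its exported `handler`.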

Next, create an S3 bucket to hold the dummy text file:

```bash
awslocal s3 mb s3://my-bucket --region eu-west-1
```

Then apply the notification configuration created earlier, so that the Lambda is invoked whenever an object is created in the bucket:

```bash
awslocal \
  s3api put-bucket-notification-configuration --bucket my-bucket \
  --notification-configuration file://s3-notif-config.json
```

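Next, in a separate terminal, tail the Lambda's CloudWatch Logs so you can watch the function execute when a file is pushed to the bucket. The `--follow` flag keeps the command running and prints new log events as they arrive (`logs tail` is only available in AWS CLI v2):

```bash
awslocal --region=eu-west-1 logs tail '/aws/lambda/my-lambda' --follow
```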

Finally, push the dummy text file to the bucket and watch the logs: you should see the Lambda execute and print the object's content type.

```bash
awslocal \
  s3api put-object --bucket my-bucket \
  --key dummyfile.txt --body=dummyfile.txt
```
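If you also want to check the handler's logic without LocalStack running at all, one option is to inject the S3 client instead of creating it at module load. `makeHandler` below is a hypothetical refactor of index.js, and `fakeS3` mimics only the aws-sdk v2 call shape the handler uses (`getObject(params).promise()`); it is a sketch, not a replacement for the end-to-end run above.

```javascript
// Hypothetical refactor of index.js: the S3 client is injected instead of
// being created at module load, so the logic can run without LocalStack.
function makeHandler(s3) {
  return async (event) => {
    const bucket = event.Records[0].s3.bucket.name;
    const key = decodeURIComponent(event.Records[0].s3.object.key.replace(/\+/g, ' '));
    try {
      // Same aws-sdk v2 call shape as the real handler.
      const { ContentType } = await s3.getObject({ Bucket: bucket, Key: key }).promise();
      console.log('CONTENT TYPE:', ContentType);
      return ContentType;
    } catch (err) {
      throw new Error(`Error getting object ${key} from bucket ${bucket}: ${err.message}`);
    }
  };
}

// Fake client that "stores" a single object and rejects for anything else.
const fakeS3 = {
  getObject: ({ Bucket, Key }) => ({
    promise: () =>
      Bucket === 'my-bucket' && Key === 'dummyfile.txt'
        ? Promise.resolve({ ContentType: 'text/plain' })
        : Promise.reject(new Error('NoSuchKey')),
  }),
};

// Build a minimal S3 event with only the fields the handler reads.
const eventFor = (key) => ({
  Records: [{ s3: { bucket: { name: 'my-bucket' }, object: { key } } }],
});

const handler = makeHandler(fakeS3);
handler(eventFor('dummyfile.txt')).then((type) => console.log('returned', type));
```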

Summary

This is just a small piece of what LocalStack has to offer. The key takeaway is that LocalStack lets you prototype software solutions without running up a bill on cloud services. It also gives you the flexibility to experiment with services before committing to a migration to the cloud.